Warning: All computations run inside your browser, not on the server.

Improper scenarios, unsuitable parameter values, or erroneous scripts might cause your web page to freeze.

Make sure you know what you are doing.

Toolbar

Overview

The toolbar is a collection of widgets that can be placed on the grid to create a scenario for the algorithms to solve.

By arranging these widgets, you can create endless scenarios to test your algorithms and check whether they perform well on edge cases.

How does it work?

Left-click an icon to select it, then click a cell on the grid to place the icon in that cell.

Robot. Represents the agent in a Grid World. It is not required by Dynamic Programming methods, since all cells are computed, but placing it is advisable because it gives a clearer view of the scenario.

Wall. It represents an inaccessible cell or state. The agent can’t cross a wall; it has to find another way around it.

Lose State. Once this state is reached, the episode ends in failure. The agent must avoid this state at all costs. This state gives a reward determined by the Lose Reward parameter (see below).

Winning State. This is the target of the agent, which will do its best to reach it. This state gives a reward determined by the Win Reward parameter (see below).

In Tunnel. This marks the entrance of a tunnel, a sort of shortcut. An agent that enters it exits at one of the existing Out Tunnels, chosen with equal probability.

Out Tunnel. It represents the exit or landing cell for an agent that entered one of the existing In Tunnels. Tunnels represent a sort of shortcut between cells.

Up Wind. Once in an Up Wind cell, the agent is more likely to be pushed up when it tries to leave the cell, no matter which action it takes. This represents the unpredictable behavior of the cell.

Down Wind. Once in a Down Wind cell, the agent is more likely to be pushed down when it tries to leave the cell, no matter which action it takes. This represents the unpredictable behavior of the cell.

Left Wind. Once in a Left Wind cell, the agent is more likely to be pushed left when it tries to leave the cell, no matter which action it takes. This represents the unpredictable behavior of the cell.

Right Wind. Once in a Right Wind cell, the agent is more likely to be pushed right when it tries to leave the cell, no matter which action it takes. This represents the unpredictable behavior of the cell.

Positive Reward. This gives the agent a positive reward when it enters the cell.

Negative Reward. This gives the agent a negative reward when it enters the cell.

Control Bar

Run: Runs the current scenario using the default implementation. Any user-written script is ignored; to run your script, use the ‘Run’ button beneath the Script Editor.

Reset: Resets the grid. It removes all widgets.

Download: Saves the current grid scenario by downloading it as a JSON file. This is a way to save your work and reload it later.

Open File: Loads a previously saved file into the grid.

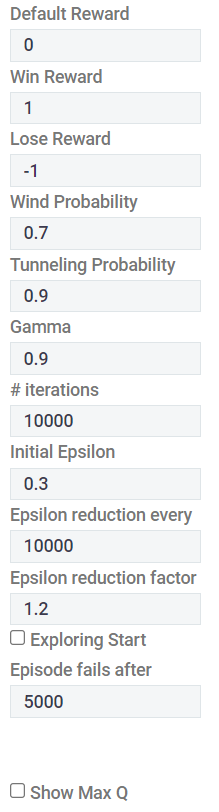

Parameters Bar

Default Reward: The reward that the agent gets by default when moving from one cell to another. By default, it is zero. If you need to change it, a negative value is preferable; otherwise the agent will tend to move in and out of the same cell to keep gathering positive rewards.

Win Reward: The reward that the agent receives when it moves into the winning state. By default, it is +1.

Lose Reward: The reward that the agent receives when it moves into a losing state. By default, it is -1.

Wind Probability: The probability that the agent is dragged in the direction of the wind. By default, it is 0.7 (70%).
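
As a rough illustration of how this probability could enter a move, the sketch below lets the wind override the chosen action with probability `windProbability` and keeps the action otherwise. The function name `applyWind`, the direction strings, and the injectable `rng` parameter are assumptions made for this example; they are not part of the lab's object model.

```javascript
// Hypothetical helper, for illustration only.
// Returns the direction the agent actually moves in when leaving a wind cell.
function applyWind(action, windDirection, windProbability = 0.7, rng = Math.random) {
  // With probability windProbability the wind overrides the chosen action.
  return rng() < windProbability ? windDirection : action;
}

// Example: the agent tries to go "left" from an Up Wind cell.
// The result is "up" about 70% of the time and "left" otherwise.
console.log(applyWind("left", "up"));
```

Passing a custom `rng` makes the outcome reproducible, which is handy when checking edge cases by hand.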

Tunneling Probability: The probability that, when the agent reaches an In Tunnel cell, it is transferred automatically to one of the existing Out Tunnel cells. By default, it is 0.9 (90%).
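
A minimal sketch of this rule, under stated assumptions: the tunnel triggers with probability `tunnelProbability`, and the exit is then drawn uniformly from the Out Tunnel cells. The name `applyTunnel`, the `{ row, col }` cell format, and the choice to leave the agent on the In Tunnel cell when the tunnel does not trigger are all assumptions for illustration, not the lab's documented behavior.

```javascript
// Hypothetical helper, for illustration only.
// Returns the cell the agent ends up in after stepping onto an In Tunnel cell.
function applyTunnel(inCell, outCells, tunnelProbability = 0.9, rng = Math.random) {
  // No exits, or the tunnel does not trigger: stay on the In Tunnel cell (assumption).
  if (outCells.length === 0 || rng() >= tunnelProbability) {
    return inCell;
  }
  // Otherwise pick one Out Tunnel cell uniformly at random.
  const index = Math.floor(rng() * outCells.length);
  return outCells[index];
}

// Example: one entrance and two possible exits.
const exits = [{ row: 4, col: 4 }, { row: 0, col: 7 }];
console.log(applyTunnel({ row: 2, col: 1 }, exits));
```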

Gamma: The discount factor applied to future rewards. By default, it is 0.9.
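
To make the role of the discount factor concrete, the sketch below computes a discounted return, r0 + gamma * r1 + gamma^2 * r2 + ..., for a sequence of rewards. The function name `discountedReturn` is an assumption for illustration and is not taken from the lab's object model.

```javascript
// Hypothetical helper, for illustration only.
// Computes r0 + gamma * r1 + gamma^2 * r2 + ... for a list of rewards.
function discountedReturn(rewards, gamma = 0.9) {
  let g = 0;
  // Accumulate from the last reward backwards: G_t = r_t + gamma * G_{t+1}.
  for (let t = rewards.length - 1; t >= 0; t--) {
    g = rewards[t] + gamma * g;
  }
  return g;
}

// Two moves earning the default reward of 0, then a move into the winning state (+1).
// The result is approximately 0.81, that is 0.9 * 0.9 * 1.
console.log(discountedReturn([0, 0, 1]));
```

The further away the winning state is, the more its reward is discounted, which is why shorter paths get higher values.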

# iterations: Number of iterations to run the algorithm. By default, it is 10 000, but the appropriate value can vary greatly depending on the algorithm. Avoid very large numbers, as they might freeze your page.

Initial Epsilon: The initial value of epsilon in an epsilon-greedy policy. With probability epsilon, the agent explores by taking a random action instead of the greedy one. This value can change over the iterations and is adjusted using ‘Epsilon reduction every’ and ‘Epsilon reduction factor’.
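
A minimal sketch of epsilon-greedy action selection, assuming the action values of the current state are available as a plain array. The name `epsilonGreedy`, the array layout, and the injectable `rng` are assumptions for illustration, not the lab's API.

```javascript
// Hypothetical helper, for illustration only.
// qValues holds one estimated value per action; the return value is an action index.
function epsilonGreedy(qValues, epsilon, rng = Math.random) {
  if (rng() < epsilon) {
    // Explore: pick any action uniformly at random.
    return Math.floor(rng() * qValues.length);
  }
  // Exploit: pick the action with the highest estimated value (first one on ties).
  let best = 0;
  for (let a = 1; a < qValues.length; a++) {
    if (qValues[a] > qValues[best]) best = a;
  }
  return best;
}

// Example with four actions and epsilon = 0.1: index 1 is returned most of the time.
console.log(epsilonGreedy([0.1, 0.5, 0.2, 0.0], 0.1));
```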

Epsilon reduction every: Tells the frequency (in number of iterations) at which the epsilon is reduced.

Epsilon reduction factor: Determines the factor at which epsilon is reduced. The new epsilon will be the previous epsilon divided by this factor.
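
The interaction of the three epsilon parameters can be sketched as below. The function name `epsilonAt` and the assumption that a reduction is applied each time the iteration count reaches a multiple of ‘Epsilon reduction every’ are illustrative; the lab's exact timing may differ, and the numbers in the example are made up.

```javascript
// Hypothetical helper, for illustration only.
// Returns the epsilon in effect at a given iteration (counted from 0).
function epsilonAt(iteration, initialEpsilon, reductionEvery, reductionFactor) {
  // Number of reductions applied so far.
  const reductions = Math.floor(iteration / reductionEvery);
  // Each reduction divides the previous epsilon by the factor.
  return initialEpsilon / Math.pow(reductionFactor, reductions);
}

// Illustrative values: start at 0.5 and halve epsilon every 1000 iterations.
for (const i of [0, 999, 1000, 2500]) {
  console.log(i, epsilonAt(i, 0.5, 1000, 2));
}
```

With a factor of 1, epsilon stays constant; larger factors shift the agent from exploration to exploitation more quickly.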

Exploring Start: In the Monte Carlo method, exploring starts tell the algorithm that an episode can start in any cell of the grid. This removes the need to explore with an epsilon-greedy policy, but it is, of course, an unrealistic assumption.

Episode fails after: Number of steps before the episode is canceled. In episodic algorithms, the agent might fail to reach a terminal state in a timely manner, so the episode would never finish. This parameter tells the algorithm to abort the episode if no terminal state is reached after the indicated number of steps.
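
The step limit can be pictured as a guard inside the episode loop. In this sketch, `runEpisode`, `step`, and `isTerminal` are hypothetical names: the caller supplies the transition and the terminal test, and the loop gives up once `maxSteps` steps have been taken.

```javascript
// Hypothetical sketch, for illustration only.
// step(state) returns the next state; isTerminal(state) tells whether the episode is over.
function runEpisode(startState, step, isTerminal, maxSteps) {
  let state = startState;
  let steps = 0;
  while (!isTerminal(state)) {
    if (steps >= maxSteps) {
      // Step budget exhausted: abort instead of looping forever.
      return { state, steps, failed: true };
    }
    state = step(state);
    steps++;
  }
  return { state, steps, failed: false };
}

// Example: a toy agent that never moves hits the limit and the episode is aborted.
console.log(runEpisode(0, (s) => s, (s) => s === 3, 5));
```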

Show Max Q: Show the maximum Q value, instead of the state value.
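
As a small illustration of the displayed quantity, the sketch below takes the action values of one cell and returns the largest of them. The name `maxQ` and the idea of storing a cell's Q values in an array, one per action, are assumptions for this example.

```javascript
// Hypothetical helper, for illustration only.
// qValues holds one Q value per action (for example up, down, left, right).
function maxQ(qValues) {
  return Math.max(...qValues);
}

// Example: the best action in this cell is worth 0.73.
console.log(maxQ([0.2, -0.1, 0.73, 0.5])); // prints 0.73
```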

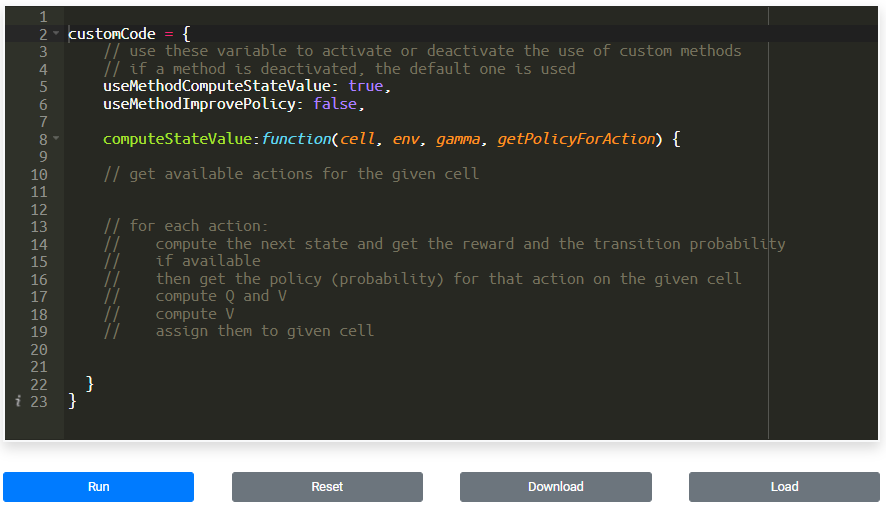

Script Editor

The script editor allows you to write and test your own implementation for the RL lab.

It uses JavaScript and gives you the flexibility to implement one or more methods of the algorithm while leaving the others to the default implementation.

In this manner, you learn bit by bit, one step at a time, while focusing on and mastering a certain task or function.

To activate or deactivate your own implementation, you need to set variables such as ‘useMethodComputeStateValue’, ‘useMethodImprovePolicy’, …

Finally, you can run your code, reset it, download it to save it, or load a saved one, by using the appropriate buttons.

For more details about the object model to use in the script please check the Object Model page.